or "Basic-Ass Machine Learning" if your boss isn't around

Try BAML: Prompt Fiddle • Examples • Example Source Code

5-minute quickstarts: Python • TypeScript • Next.js • Ruby • Others (Go, Java, C++, Rust, PHP, etc.)

| Question | Answer |
|---|---|
| What is BAML? | BAML is a new programming language for building AI applications. |
| Do I need to write my whole app in BAML? | Nope, only the AI parts. You can then use BAML with any existing language of your choice: Python, TypeScript, and more. |
| Is BAML stable? | We are not yet at 1.0, and we ship updates weekly. We rarely, if ever, make breaking changes. |
| Why a new language? | Jump to section |
| Why a lamb? | Baaaaa-ml. LAMB == BAML |

The fundamental building block in BAML is a function. Every prompt is a function that takes in parameters and returns a type.

```baml
function ChatAgent(message: Message[], tone: "happy" | "sad") -> string
```

Every function additionally defines which models it uses and what its prompt is.

```baml
function ChatAgent(message: Message[], tone: "happy" | "sad") -> StopTool | ReplyTool {
  client "openai/gpt-4o-mini"

  prompt #"
    Be a {{ tone }} bot.

    {{ ctx.output_format }}

    {% for m in message %}
    {{ _.role(m.role) }}
    {{ m.content }}
    {% endfor %}
  "#
}

class Message {
  role string
  content string
}

class ReplyTool {
  response string
}

class StopTool {
  action "stop" @description(#"
    when it might be a good time to end the conversation
  "#)
}
```

Then, in any language of your choice, you can do the following (Python shown):

from baml_client import b

from baml_client.types import Message, StopTool

messages = [Message(role="assistant", content="How can I help?")]

while True:

print(messages[-1].content)

user_reply = input()

messages.append(Message(role="user", content=user_reply))

tool = b.ChatAgent(messages, "happy")

if isinstance(tool, StopTool):

print("Goodbye!")

break

else:

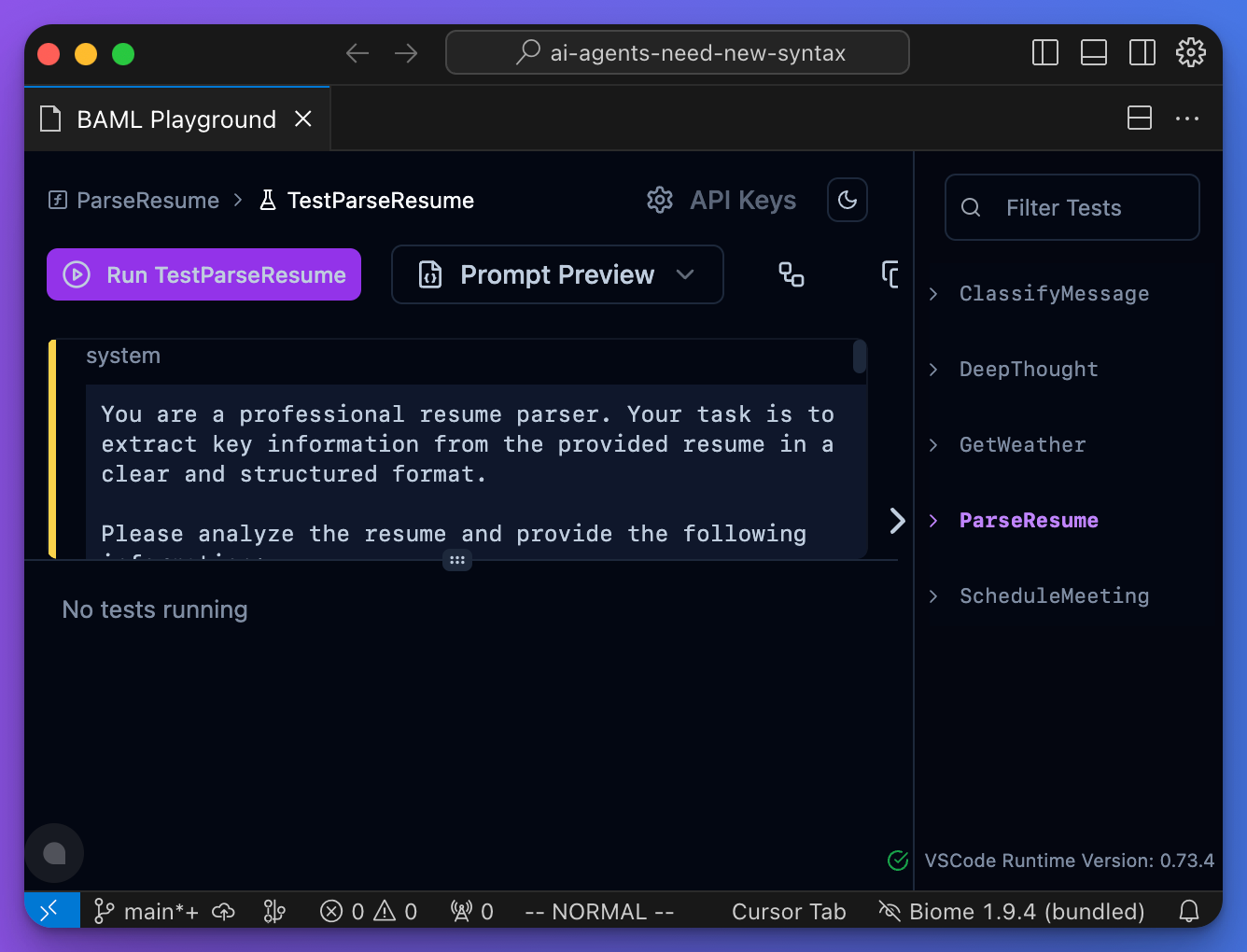

messages.append(Message(role="assistant", content=tool.reply))Since every prompt is a function, we can build tools to find every prompt you've written. But we've taken BAML one step further and built native tooling for VSCode (jetbrains + neovim coming soon).

- You can see the full prompt (including any multi-modal assets)

- You can see the exact network request we are making

- You can see every function you've ever written
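Because each prompt is declared as a named `function`, even a crude text scan can list every prompt in a codebase. The sketch below assumes BAML source is available as a string; `list_prompt_functions` is a hypothetical helper, and the regex is only a rough stand-in for a real parser (it would, for example, be fooled by a prompt line that happens to start with `function Foo(`).

```python
import re

# Hypothetical pattern: a line that starts with `function Name(` declares a prompt.
# A regex is only an approximation of what a real BAML parser would do.
FUNCTION_DECL = re.compile(r"^\s*function\s+([A-Za-z_]\w*)\s*\(", re.MULTILINE)


def list_prompt_functions(baml_source: str) -> list[str]:
    """Return the names of all functions declared in a BAML source string."""
    return FUNCTION_DECL.findall(baml_source)


example = '''
function ChatAgent(message: Message[], tone: "happy" | "sad") -> StopTool | ReplyTool {
  client "openai/gpt-4o-mini"
  prompt #"..."#
}

class Message {
  role string
  content string
}
'''

print(list_prompt_functions(example))  # ['ChatAgent']
```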

Switching LLMs is just 1 line (ok, maybe 2). Docs

Retry policies • fallbacks • model rotations. All statically defined.
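BAML declares these policies statically in `.baml` files. To make their runtime behavior concrete, here is a plain-Python sketch of what a retry policy, a fallback chain, and a round-robin rotation each do when a call is made. The `Client` type and the helpers `with_retries`, `fallback`, and `round_robin` are hypothetical; they are not BAML's generated API or its internal implementation.

```python
import itertools
import time
from typing import Callable, Sequence

# Hypothetical stand-in for an LLM client: takes a prompt, returns text or raises.
Client = Callable[[str], str]


def with_retries(client: Client, max_retries: int = 2, base_delay: float = 0.0) -> Client:
    """Call `client`, retrying up to `max_retries` times with exponential backoff."""
    def call(prompt: str) -> str:
        attempt = 0
        while True:
            try:
                return client(prompt)
            except Exception:
                if attempt >= max_retries:
                    raise
                time.sleep(base_delay * 2 ** attempt)  # waits 1x, 2x, 4x ... base_delay
                attempt += 1
    return call


def fallback(clients: Sequence[Client]) -> Client:
    """Try each client in order and return the first successful response."""
    def call(prompt: str) -> str:
        errors = []
        for client in clients:
            try:
                return client(prompt)
            except Exception as exc:
                errors.append(exc)
        raise RuntimeError(f"all {len(clients)} clients failed: {errors}")
    return call


def round_robin(clients: Sequence[Client]) -> Client:
    """Rotate through the clients, using the next one on each call."""
    cycle = itertools.cycle(clients)
    def call(prompt: str) -> str:
        return next(cycle)(prompt)
    return call


def flaky(prompt: str) -> str:
    raise TimeoutError("model unavailable")


def stable(prompt: str) -> str:
    return f"echo: {prompt}"


# Try `flaky` twice (1 retry), then fall back to `stable`.
client = fallback([with_retries(flaky, max_retries=1), stable])
print(client("hi"))  # echo: hi

# Alternate between two clients on successive calls.
rotating = round_robin([lambda p: "A", lambda p: "B"])
print(rotating("x"), rotating("x"), rotating("x"))  # A B A
```

Declaring these as data rather than hand-written loops is what lets BAML check them statically; the Python version only shows the call-time semantics.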

Want to pick models at runtime? Check out Client Registry.

We currently support: OpenAI • Anthropic • Gemini • Vertex • Bedrock • Azure OpenAI • Anything OpenAI Compatible (Ollama, OpenRouter, vLLM, LM Studio, TogetherAI, and more)

Using AI is all about iteration speed.

If testing your pipeline takes 2 minutes, in 20 minutes, you can only test 10 ideas.

If testing your pipeline takes 5 seconds, in 20 minutes, you can test 240 ideas.
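Those numbers come from dividing a 20-minute (1,200-second) session by the time each run takes. As a quick check (`ideas_per_session` is just an illustrative name):

```python
def ideas_per_session(session_minutes: float, seconds_per_test: float) -> int:
    """How many full pipeline runs fit into one working session."""
    return int(session_minutes * 60 // seconds_per_test)


print(ideas_per_session(20, 120))  # 10  (2-minute pipeline)
print(ideas_per_session(20, 5))    # 240 (5-second pipeline)
```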

Introducing testing, for prompts.

JSON is amazing for REST APIs, but way too strict and verbose for LLMs. LLMs need something flexible. We created the SAP (schema-aligned parsing) algorithm to support the flexible outputs LLMs can provide, like markdown within a JSON blob or chain-of-thought prior to answering.

SAP works with any model on day-1, without depending on tool-use or function-calling APIs.
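To give a feel for the problem SAP addresses, here is a deliberately tiny Python sketch of lenient parsing. It is not the SAP algorithm and shares no code with BAML; `lenient_parse_reply` and its repair rules (skip leading prose, unwrap a markdown code fence, drop trailing commas, then check the expected field) are assumptions chosen for illustration.

```python
import json
import re
from dataclasses import dataclass

FENCE = "`" * 3  # a markdown code fence, built here so it does not end this block


@dataclass
class ReplyTool:
    response: str


def lenient_parse_reply(raw: str) -> ReplyTool:
    """Toy lenient parser (NOT SAP): repair common LLM output quirks, then validate."""
    # 1. Prefer the contents of a fenced block (optionally tagged json) if present.
    fenced = re.search(FENCE + r"(?:json)?\s*(\{.*?\})\s*" + FENCE, raw, re.DOTALL)
    candidate = fenced.group(1) if fenced else raw
    # 2. Skip any chain-of-thought prose: keep only the outermost {...} span.
    start, end = candidate.find("{"), candidate.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found")
    candidate = candidate[start : end + 1]
    # 3. Drop trailing commas before } or ], which strict JSON rejects.
    candidate = re.sub(r",\s*([}\]])", r"\1", candidate)
    data = json.loads(candidate)
    # 4. Align with the schema: require the field and coerce it to str.
    if "response" not in data:
        raise ValueError("missing field: response")
    return ReplyTool(response=str(data["response"]))


llm_output = (
    "Let me think step by step. The user wants a friendly greeting.\n\n"
    + FENCE + "json\n"
    + '{\n  "response": "Hello there!",\n}\n'
    + FENCE
)

print(lenient_parse_reply(llm_output))  # ReplyTool(response='Hello there!')
```

A strict `json.loads(llm_output)` would fail on this input at the very first character.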

To learn more about SAP you can read this post: Schema Aligned Parsing.

See it in action with: DeepSeek-R1 and OpenAI o1.

Streaming is way harder than it should be. With our generated Python, TypeScript, and Ruby code, streaming becomes natural and type-safe.
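The core idea behind type-safe streaming is that partial results get their own type, in which fields that have not arrived yet are `Optional`, while the final result is validated into the strict type. A minimal sketch of that idea, assuming the model streams the `response` text in chunks; `PartialReplyTool`, `stream_reply`, and `finalize` are hypothetical names, not BAML's generated streaming API.

```python
from dataclasses import dataclass
from typing import Iterator, Optional


@dataclass
class ReplyTool:
    response: str


@dataclass
class PartialReplyTool:
    # While streaming, a field may not have arrived yet, so it is Optional.
    response: Optional[str] = None


def stream_reply(chunks: Iterator[str]) -> Iterator[PartialReplyTool]:
    """Yield a progressively more complete partial object for each text chunk."""
    text = ""
    for chunk in chunks:
        text += chunk
        yield PartialReplyTool(response=text)


def finalize(partial: PartialReplyTool) -> ReplyTool:
    """Validate the last partial into the strict, fully typed result."""
    if partial.response is None:
        raise ValueError("stream ended before `response` arrived")
    return ReplyTool(response=partial.response)


last = PartialReplyTool()
for partial in stream_reply(iter(["Hel", "lo", " there!"])):
    print(partial.response)  # Hel, then Hello, then Hello there!
    last = partial

print(finalize(last))  # ReplyTool(response='Hello there!')
```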

- 100% open-source (Apache 2)

- 100% private. AGI will not require an internet connection, and neither will BAML

- No network requests beyond the model calls you explicitly set up

- Nothing is stored or used as training data

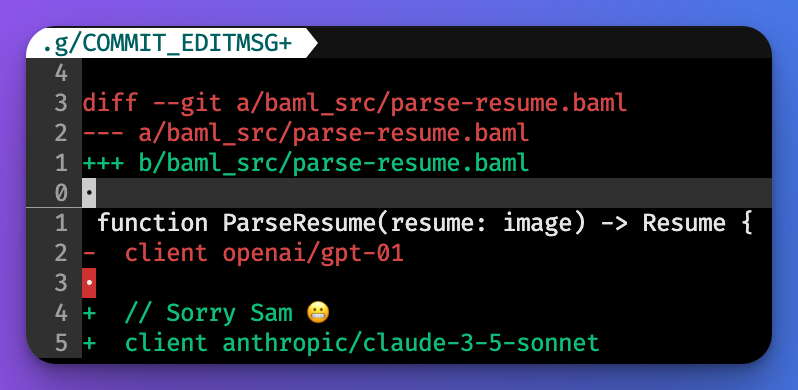

- BAML files can be saved locally on your machine and checked into GitHub for easy diffs.

- Built in Rust. So fast, you can't even tell it's there.

Everything is fair game when designing new syntax: if you can code it, it can be yours. To keep ourselves from going overboard, we follow this design philosophy:

- 1: Avoid invention when possible
  - Yes, prompts need versioning, and we have a great versioning tool: git
  - Yes, you need to save prompts, and we have a great storage tool: filesystems
- 2: Any file editor and any terminal should be enough to use it
- 3: Be fast
- 4: A first-year university student should be able to understand it

We used to write websites like this:

```python
def home():
    return "<button onclick=\"() => alert(\\\"hello!\\\")\">Click</button>"
```

And now we do this:

```jsx
import { useState } from "react";

function Home() {
  const [count, setCount] = useState(0);
  return <button onClick={() => setCount(prev => prev + 1)}>
    {count} clicks!
  </button>;
}
```

New syntax can be incredible at expressing new ideas. Plus, the idea of maintaining hundreds of f-strings for prompts kind of disgusts us 🤮. Strings are bad for maintainable codebases. We prefer structured strings.

The goal of BAML is to give you the expressiveness of English, but the structure of code.

Full blog post by us.

As models get better, we'll continue expecting even more out of them. But what will never change is that we'll want a way to write maintainable code that uses those models. The way we currently assemble strings is very reminiscent of the PHP/HTML soup of early web development. We hope some of the ideas we shared today can make a tiny dent in shaping the way we all code tomorrow.

Check out our guide on getting started.

Made with ❤️ by Boundary

HQ in Seattle, WA

P.S. We're hiring software engineers who love Rust. Email us or reach out on Discord!