A toy use case showing how to use Snowflake as a full end-to-end ML platform.

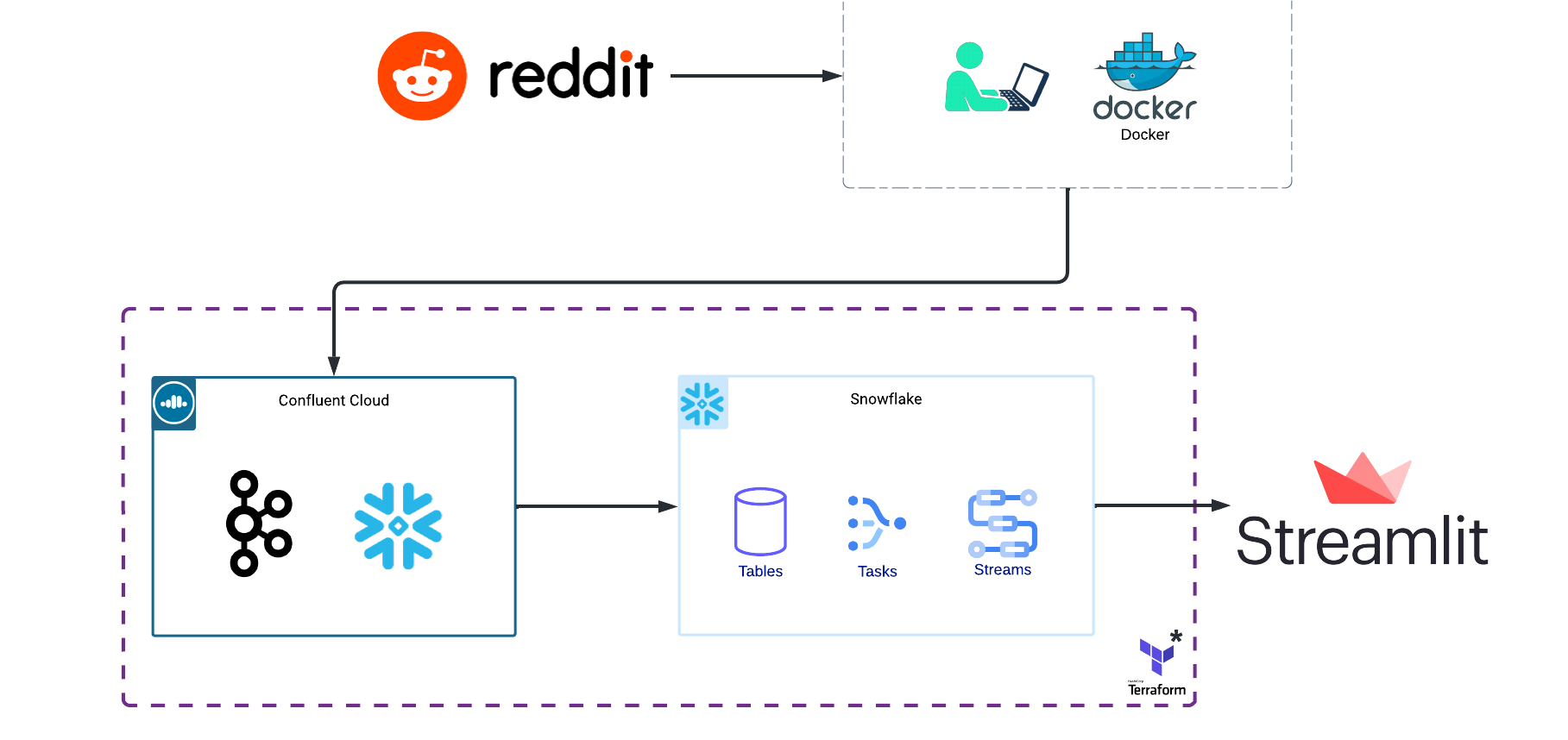

- Terraform-managed infrastructure
- Snowflake
  - Python integration via Snowpark Python
  - Tasks
  - Streams
  - Materialized Views
- Streamlit dashboard application
- CI/CD via GitHub Actions
  - Display model metrics via CML
  - Update Snowflake UDFs
  - Tag versions
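One of the CI/CD steps above displays model metrics via CML. CML posts a Markdown file as a pull-request comment (in recent CML versions with `cml comment create report.md`), so the training step only needs to write that file. The sketch below is a minimal illustration under that assumption; the metric names, the `report.md` file name and the helper functions are hypothetical and not taken from this repo's workflow.

```python
"""Write model metrics as a Markdown table that CML can post on a pull request.

Hypothetical helper: metric names and the report path are placeholders.
"""
from pathlib import Path
from typing import Dict


def metrics_to_markdown(metrics: Dict[str, float], title: str = "Model metrics") -> str:
    """Render metrics as a two-column Markdown table, sorted by metric name."""
    lines = [f"## {title}", "", "| Metric | Value |", "| --- | --- |"]
    for name in sorted(metrics):
        # Fixed precision keeps the table stable across runs.
        lines.append(f"| {name} | {metrics[name]:.4f} |")
    return "\n".join(lines) + "\n"


def write_report(metrics: Dict[str, float], path: str = "report.md") -> Path:
    """Write the Markdown report to `path` and return the written file's path."""
    out = Path(path)
    out.write_text(metrics_to_markdown(metrics), encoding="utf-8")
    return out
```

In a GitHub Actions job, a training script could call `write_report(...)` with its evaluation results, and a following step would hand `report.md` to CML.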

To get started, whether you want to contribute or run any applications, first clone the repo and install the dependencies.

```bash
git clone git@github.com:datarootsio/snowflake-ml.git
cd snowflake-ml
pip install poetry==1.1.2  # optional, install Poetry if needed
poetry install
pre-commit install  # optional, though recommended: installs the pre-commit hooks
```

To run the Streamlit dashboard locally:

```bash
poetry run streamlit run dashboard/👋_hello.py
```

The app consists of:

- Local Apache Kafka connector

- Apache Kafka cluster on Confluent Cloud

- Snowflake data warehouse

- Streamlit app (run locally)

Both the Confluent Cloud and the Snowflake infrastructure are managed by Terraform.

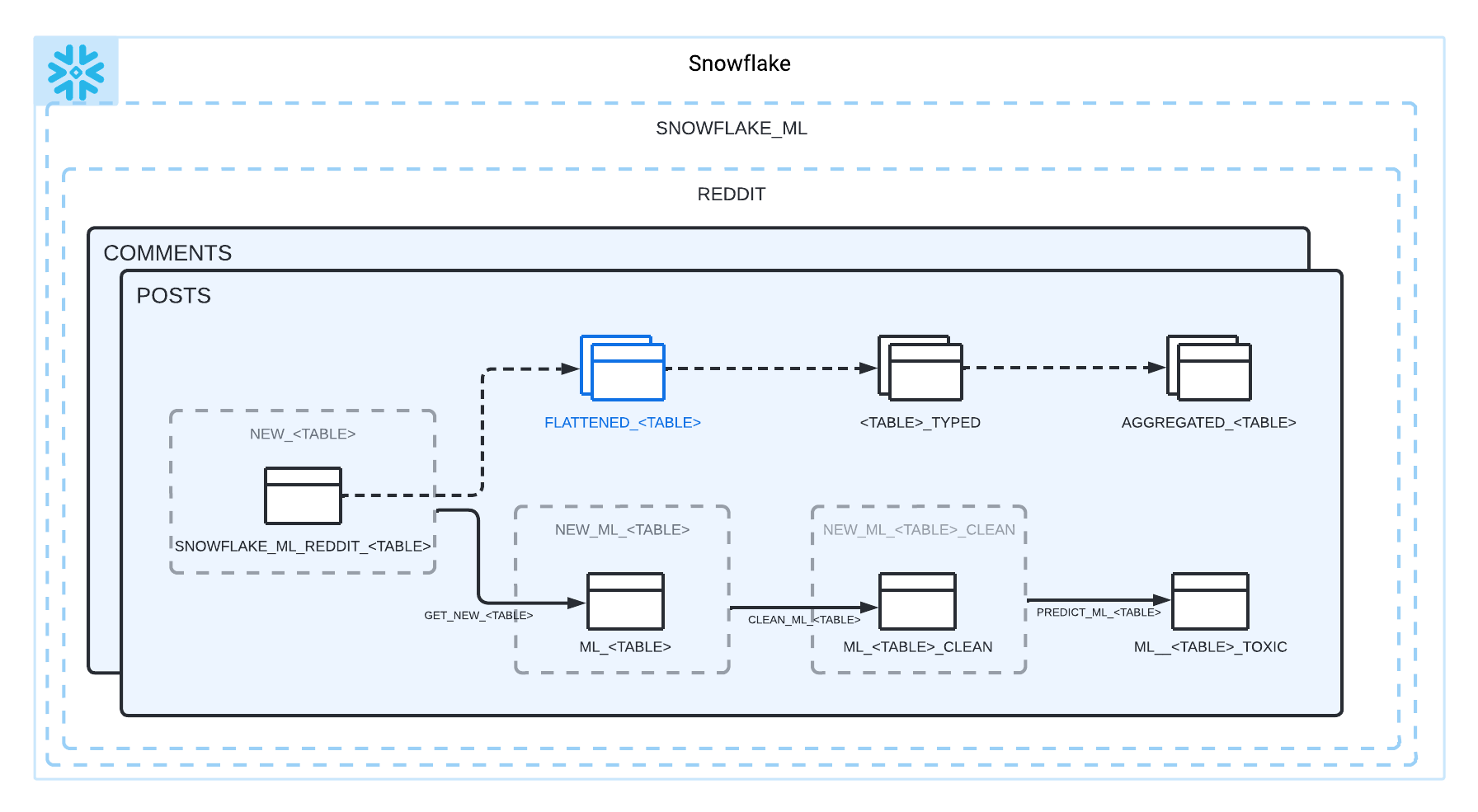

Taking a closer look at Snowflake, the landing tables are updated in real time via Confluent Cloud's Snowflake Connector and Snowpipe. From there, the data is transformed with views and materialized views to compute aggregate statistics. In addition, Snowflake streams, tasks and Python UDFs apply the machine learning model to the incoming data and store the predictions in a table that the Streamlit app reads.
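The Snowflake flow described above can be sketched as a handful of SQL statements: a materialized view for aggregates, a stream on the landing table, and a task that runs only when the stream has new rows and calls a Python UDF. The snippet renders those statements from Python so they could be executed through a Snowpark session. It is a sketch under several assumptions: the landing table uses the Kafka connector's default `RECORD_CONTENT` VARIANT column, the JSON payload has hypothetical fields `id`, `category` and `value`, and a Python UDF named `predict_udf` is already registered. All object names, the warehouse and the schedule are hypothetical placeholders, not the repo's actual objects.

```python
"""Render Snowflake DDL for a landing table -> stream -> task -> UDF -> predictions flow.

Every object name and JSON field below is a hypothetical placeholder.
"""
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PipelineConfig:
    landing_table: str = "landing_table"    # filled by the Kafka connector / Snowpipe
    stats_view: str = "landing_stats_mv"    # materialized view with aggregate statistics
    stream: str = "landing_stream"          # tracks new rows on the landing table
    task: str = "predict_task"              # scores new rows when the stream has data
    udf: str = "predict_udf"                # Python UDF wrapping the trained model (assumed)
    predictions_table: str = "predictions"  # table read by the dashboard
    warehouse: str = "compute_wh"
    schedule: str = "1 MINUTE"


def build_pipeline_sql(cfg: PipelineConfig) -> List[str]:
    """Return the statements in the order they should be executed."""
    category = "RECORD_CONTENT:category::STRING"
    value = "RECORD_CONTENT:value::FLOAT"
    return [
        # Aggregates over the single landing table, kept up to date by Snowflake.
        f"CREATE OR REPLACE MATERIALIZED VIEW {cfg.stats_view} AS "
        f"SELECT {category} AS category, COUNT(*) AS n_events, AVG({value}) AS avg_value "
        f"FROM {cfg.landing_table} GROUP BY {category}",
        # The stream records changes made to the landing table since it was last consumed.
        f"CREATE OR REPLACE STREAM {cfg.stream} ON TABLE {cfg.landing_table}",
        # The task checks on a schedule but only runs its body when the stream has data;
        # consuming the stream in the INSERT advances its offset.
        f"CREATE OR REPLACE TASK {cfg.task} "
        f"WAREHOUSE = {cfg.warehouse} SCHEDULE = '{cfg.schedule}' "
        f"WHEN SYSTEM$STREAM_HAS_DATA('{cfg.stream.upper()}') "
        f"AS INSERT INTO {cfg.predictions_table} (event_id, prediction) "
        f"SELECT RECORD_CONTENT:id::NUMBER, {cfg.udf}({value}) "
        f"FROM {cfg.stream} WHERE METADATA$ACTION = 'INSERT'",
        # Tasks are created suspended, so they must be resumed explicitly.
        f"ALTER TASK {cfg.task} RESUME",
    ]
```

With an open Snowpark `Session`, each statement could be executed in order with `session.sql(stmt).collect()`. Keeping the DDL in one function makes it easy to review in a pull request, though the repo itself manages its infrastructure with Terraform.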

This project is maintained by dataroots. For any questions, contact us at [email protected] 🚀